High-Tech Software House

(5.0)

Considering our previous post on accelerating the computer vision project, we wondered what would be a perfect choice of a predictive model for such a task. Typically, onboard computers on robots or even autonomous cars have limited computational resources due to battery draining, so the less energy we consume, the better for the system. We could realize these requirements in deep learning by deploying smaller models, but what about performance? What model would achieve satisfactory performance while meeting real-world requirements regarding inference speed and hardware requirements? These questions arose from a real need when discussing the ongoing project, and we decided to share our conclusions with you.

In the following post, we evaluated a group of deep learning models for the instance segmentation problem. Due to our previous experience and license limitations in the open-source world (e.g., famous YOLO from version 5 is not free for companies), we decided to focus on the entirely free-of-charge region-based methods with different feature extractors anyone can use, even in commercial projects. Moreover, we add a restriction that all tested methods must run on a reasonably-priced laptop GPU, Nvidia RTX 3060, with 6GB of VRAM that can be a part of the onboard robotic setup.

We hope that our post will let other engineers quickly find the optimal starting point for their vision projects.

Instance segmentation vs object detection

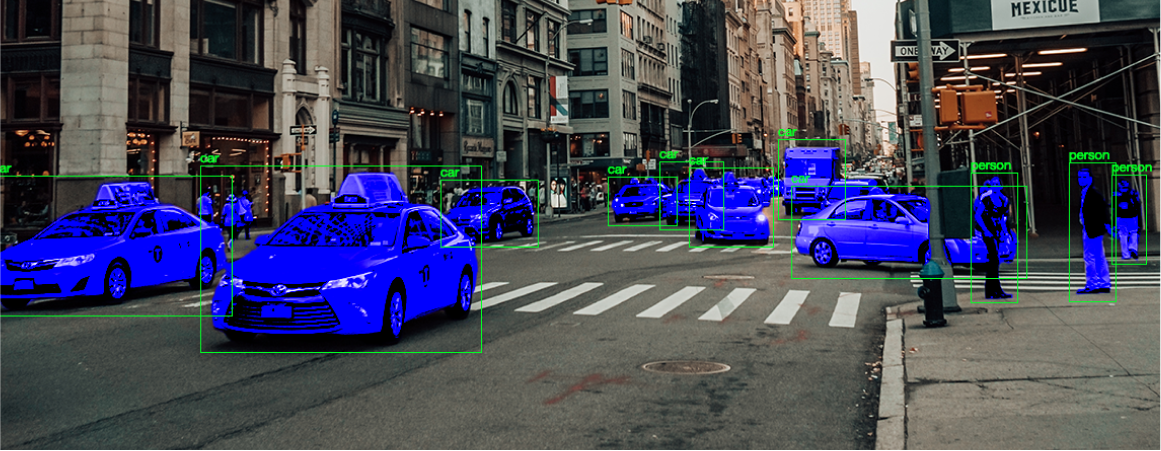

Let's clarify what we will do. Our task is called instance segmentation, in which we focus on segmenting individual objects within an image and labelling each pixel of the object with a class label. Instance segmentation is far different from object detection, which is detecting and labelling the presence of things in an image but not segmenting the pixels of each object. In other words, object detection produces a bounding box around each object in the photo, while instance segmentation provides a pixel-level mask for each object. That is precisely the task for Mask-RCNN (Mask Region-based Convolutional Neural Network), the model built upon a Faster-RCNN made for object detection with the additional module that produces pixel masks.

The following section briefly introduces the core algorithm used to obtain masks and bounding boxes working on different backbones for the instance segmentation model used in the evaluation. These backbones were initially trained on ImageNet, and the complete Mask RCNN model was fine-tuned using the COCO dataset.

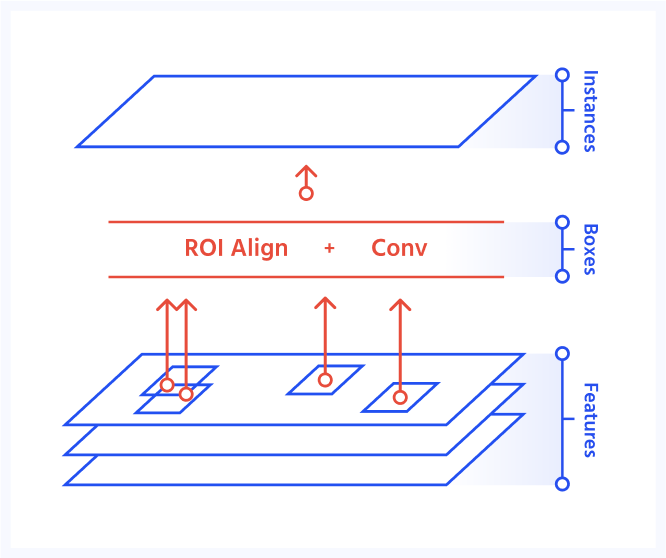

Mask R-CNN is a deep learning model developed for the instance segmentation task. It is a two-stage model that first detects objects in an image and then generates masks for each instance of the object. In the first stage of the model, a convolutional neural network (CNN) produces a set of bounding boxes and corresponding object class probabilities for the objects present in the image. In the second stage, the model generates a mask for each object by using the bounding box information from the first stage and applying a small, fully convolutional network (FCN) to the region within the box that produces a fixed-sized output (it prevents from collapsing a mask prediction into a vector). The resulting mask is then resized to the size of the bounding box and combined with the bounding box and class prediction to form the final output of the model. Mask R-CNN is a popular model doing instance segmentation and has already been successfully deployed in many applications, including object recognition, medical image analysis, and autonomous vehicle navigation. The following picture shows our test setup.

Backbones

ResNet (CVPR'2016)

A natural choice for a strong baseline is a ResNet 101 backbone, pretrained on a COCO dataset. ResNet stands for Residual Network and is a convolutional neural network developed in 2015. It’s a particular case of Highway Nets, originally introduced by Jurgen Schmidhuber. When the ResNet was published, the authors introduced skip connections, allowing the model to bypass some layers. This method is effective against vanishing gradient problems in very deep architectures and allows networks to combine features from shallower layers with deeper layers.

ResNeXt (CVPR'2017)

A continuation of work on ResNet was an architecture called ResNeXt, short for Residual Network eXtended. It also uses skip connections but differs in terms of capacity design. Instead of making nets deeper, the authors decided to increase the width of the network by using more convolutional layers in each layer (called cardinality), creating multiple paths.

ResNeSt (CVPR'2022)

The another variation belonging to the ResNet family. Similarly to ResNeXt, ResNeSt creates multiple paths of input using convolutional layers. However, this model is a hybrid of the convolutional neural network and attention modules because it applies a channel-wise split attention block on different layers of cardinals. In the end, the model concatenates produced feature maps.

Swin (ICCV'2021)

Another backbone used in our benchmark is Swin (Shifted window), a variant of a Vision Transformer (ViT). The concept of vision transformers originates from the famous Attention is All You Need paper and utilizes a multi-head self-attention layer, adopted initially to the NLP domain. To analyze an image less coarsely, as a ViT does use 16x16 px image patches, Swin creates hierarchical feature maps starting with 4x4 px patches and adapts a shifted window-based self-attention. It allows using Swin as a general-purpose backbone for recognition tasks.

ConvNeXt (CVPR'2022)

Despite successfully introducing transformer-based models to vision tasks, the research on convolutional-based models continued. The paper's authors bring incremental improvements in domain and ideas from training the transformer models and applying them to ResNet architecture. Some tricks are bigger kernels to enlarge a receptive field of layers, depthwise convolutions, replacing Rectified Linear Units activations with modern Gaussian Error Linear Units, using fewer activations and layer normalizations at all, and generally replacing Batch Normalization with Layer Normalization over the channel dimension. The results of those experiments are comparable to Swin on multiple benchmarks. ConvNeXt is an exceptional example of research where authors presented no novelty but focused on excellent engineering and the best possible utilization of existing blocks. They wrote in their paper: "(...) these designs are not novel even in the ConvNet literature — they have all been researched separately, but not collectively, over the last decade". That is a fundamental lesson for researchers to not blindly follow fashion (as Transformers dominated the field) but focus on solving problems.

Model and data preparation

In our experiments, we used packages from OpenMMLab open-source ecosystem. It’s a platform for developing and evaluating machine learning algorithms for computer vision tasks. The ecosystem includes implementations of the most popular networks with code for training, evaluation and deployment. Notably, the OpenMMLab team implemented multiple operations as plugins for the TensorRT library, enabling the deploying state-of-the-art models with even improved performance.

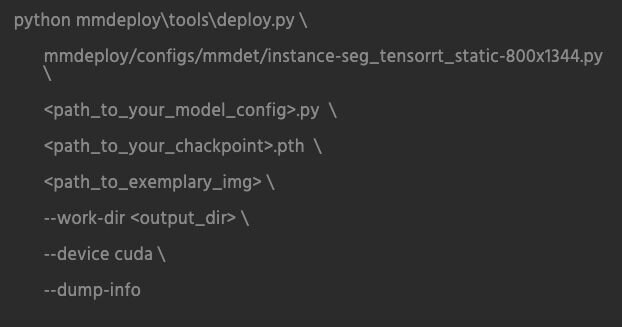

Each model was optimized using TensorRT for faster performance on our specific GPU. Run the following command to convert existing models from the MMDetection for the static graph with the input size 1344x800 using the MMDeploy package. These models automatically resize every input to the required dimensions. More on the procedure and deployment steps can be found on this link.

Test data come from the nuScenes dataset for autonomous driving. We selected 15 photos from the car's front, left and right cameras collecting data. It is few, but we do not validate the accuracy of selected models, and this number is enough to measure the inference speed and assess the overall quality of outcomes. The photos come in size 1600x900 px, and before passing them to the model are downsized to 1344x800. We define inference time as the inference using MMDeploy API, which includes data processing and eventual preprocessing like resizing.

Results

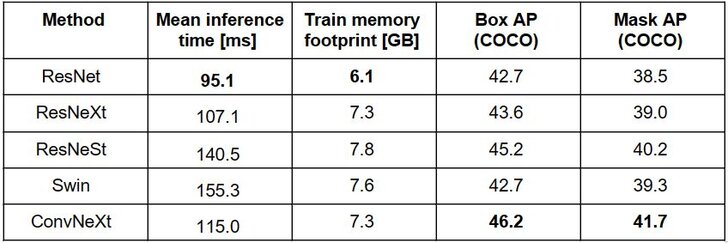

The following table contains Box Average Precision (Box AP) and Mask Average Precision (Mask AP) reported for the COCO dataset by the authors of MMDeploy. Generally, the mAP is the mean of the AP calculated for all the classes. For more information about mAP, see the following article.

The table also shows the results on inference time. The fastest backbone was the ResNet-101, with a mean inference time of 95.1 ms per image. Surprisingly, box AP and mask AP are on par with much newer Swin. However, Swin works over 60% slower, which makes him a bad alternative. An interesting choice for the instance segmentation would be only slightly slower ResNeXt with the mean inference time of 107.1 ms, pushing forward ResNet results. The best results in average precision achieved ResNeSt and ConvNeXt backbones, but the ResNeSt is the biggest model in the table. Nevertheless, ConvNeXt takes 0.5 GB less memory and achieves the best Box and Mask AP results. At the same time, the mean inference time is only 20 ms slower than ResNet, which makes it - in our opinion - the most profitable model in the following benchmark, with the best ratio between AP and inference time.

Exemplary results show the difference between our best model ConvNeXt and the baseline ResNet. In the picture below, one can notice that ConvNeXt tend to make the same mistakes as its smaller counterpart (e.g., does not notice a traffic light above the crossing) but finds more instances in the image, like more TV screens with advertisements on the building or a car leaving the parking. All these details improve the Mask and Box AP of the ConvNeXt model, while the inference time is slightly longer than ResNet. We find that model is an optimal choice for similar vision projects.

Instance segmentation involves object detection to identify and locate an object in an image. Then, the semantic segmentation model assigns a class inside the bounding box to the pixels which belong to the object. The following article contains a comparison of different versions of a Mask-RCNN algorithm. We briefly reviewed some of the most popular backbones, starting from a “golden rule” of ResNet and its modernized descendants through a transformer-based model Swin to a 2022 update for convolutional nets ConvNeXt.

In addition to theory, we compared the inference time of previously mentioned algorithms on selected photos from the nuScenes dataset - the dataset for autonomous driving. We used an OpenMMLab ecosystem to load models, convert them to TensorRT engines and perform the inference. Based on this, we selected a ConvNeXt approach as the best tradeoff between accuracy and inference time. There is also some visualization of the results.

See our tech insights

Like our satisfied customers from many industries