
A Large Language Model (LLM) is a type of artificial intelligence (AI) algorithm that is trained on vast datasets and designed to recognize, summarize, and generate text and other types of content.

The technology has become popular thanks to tools such as ChatGPT and Microsoft Bing. However, it still faces several challenges that could be significant hurdles to widespread adoption. Generative services trained on internet resources carry the risk of perpetuating and amplifying incorrect stereotypes and toxic content, processing sensitive data without authorization and thereby infringing on privacy, and spreading unverified information and fake news. In commercial and public sector applications, the data used to train the algorithm is carefully selected. Even so, teams implementing AI systems based on LLMs must contend with hallucinations: responses that mislead users by presenting false information. Typically, nothing in the way a response is phrased signals to the user that the information may be untrue.

LLMs are based on an elaborate statistical model and have no built-in mechanism that guarantees "true" output. Instead, they produce text that is probable given the input, because, fundamentally, LLMs do not truly "understand" text. Large language models recognize patterns in their training data, and their responses are based on those patterns. They are sensitive to the exact input sequence and can give different responses to slightly different questions. They cannot reason or think critically in the way humans do.
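The probabilistic behavior described above can be illustrated with a toy next-token sampler. This is a minimal sketch under stated assumptions: the candidate answers and their scores (`LOGITS`) are invented, and a real model scores every token of a large vocabulary at each generation step. Only the general principle carries over: scores are turned into probabilities with a softmax, scaled by a temperature, and the output is sampled from that distribution.

```python
import math
import random

# Invented scores (logits) for candidate answers to a counting question.
# A real LLM computes such scores for every token in a large vocabulary.
LOGITS = {"4": 2.0, "3": 1.6, "5": 1.2, "6": 0.4}


def softmax(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Convert scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_token(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)
    # The same "prompt" answered three times can yield different answers.
    print([sample_token(LOGITS, 1.0, rng) for _ in range(3)])
    # Probability of the top answer at temperature 1.0 versus 0.1.
    print(round(softmax(LOGITS, 1.0)["4"], 2), round(softmax(LOGITS, 0.1)["4"], 2))
```

With these invented scores, the most probable answer receives only about 43% of the probability at temperature 1.0, so repeated runs can easily disagree, much like the ChatGPT-4 example in this article. Lowering the temperature makes the output more repeatable, but not more truthful: the model simply picks its most probable answer more consistently, and that answer may still be wrong.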

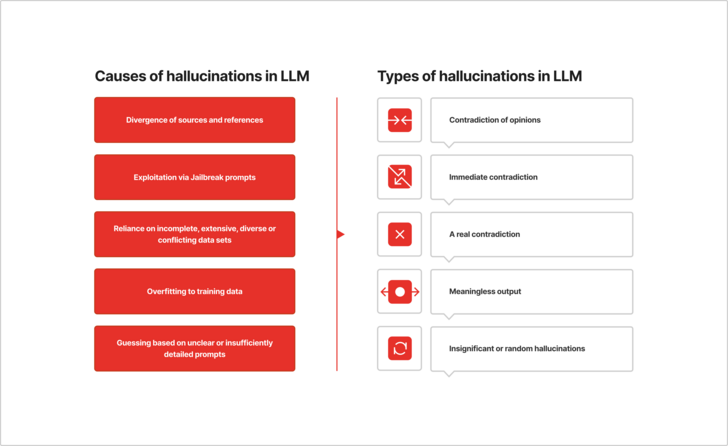

The example above illustrates a hallucination from ChatGPT-4, which gave a different answer each time the same prompt (question) was repeated, three times in total. When asked "How many people served as President in Poland between 1990 and 2010?", it answered 4, 3, and 5, listing names each time. Across the three responses, ChatGPT-4 mentioned 6 different people who served as President of Poland, yet none of the responses contained a complete list.

However, hallucinations in LLMs can be viewed more as a characteristic or property of the technology than as an error. This perspective allows for a more objective assessment of LLMs and the tools built on them, and for a forward-looking approach that is less critical and more open to development. Understanding the causes and types of hallucinations enables experienced implementation teams to analyze them and adapt solutions that make results as accurate as possible, including through proper design of the customer experience (which also helps maximize customer satisfaction).

The graphic below illustrates the causes and types of generated hallucinations.

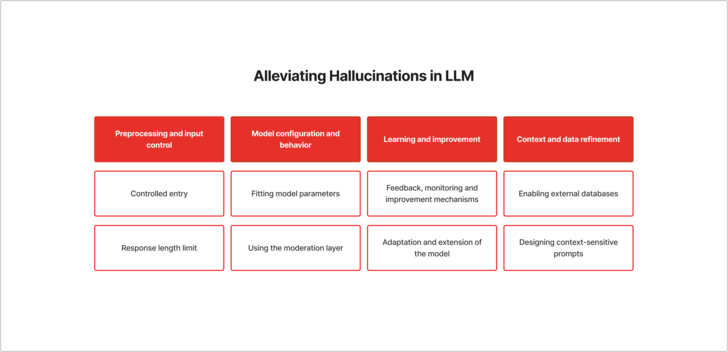

The areas and specific actions that can be taken in this regard are outlined below:

1. Pre-processing and input control:

2. Model configuration and behavior:

3. Learning and refinement:

4. Context and data enhancement:
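As one concrete illustration of such an action, here is a minimal sketch of a consistency check that an implementation team might place around a model to catch the kind of inconsistency shown in the ChatGPT-4 example: the same prompt is sent several times, and the answer is accepted only if most runs agree. This technique is our own choice of example, not one the article prescribes. `ask_model` is a hypothetical stand-in for whatever LLM client a project uses, and the defaults (`n=5`, `min_agreement=0.8`) are arbitrary assumptions.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def consistency_check(ask_model: Callable[[str], str], prompt: str,
                      n: int = 5, min_agreement: float = 0.8) -> tuple[str, bool]:
    """Send the same prompt n times and return (majority answer, trusted?).

    The answer counts as trusted only if at least `min_agreement` of the runs
    returned exactly the same text (a real system would normalize answers
    before comparing them); otherwise it should be routed to human review.
    """
    answers = [ask_model(prompt).strip() for _ in range(n)]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n >= min_agreement


if __name__ == "__main__":
    question = "How many people served as President in Poland between 1990 and 2010?"

    # Hypothetical stand-in for an LLM call that, like the ChatGPT-4 example,
    # returns a different count on successive attempts.
    replies = cycle(["4", "3", "5"])

    def flaky_model(prompt: str) -> str:
        return next(replies)

    print(consistency_check(flaky_model, question))
```

Here, at most two of the five runs agree, so the answer is flagged as not trusted. Agreement does not prove correctness, because a model can repeat the same wrong answer consistently. A check like this can flag answers for review, but it cannot certify them.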

The key to limiting hallucinations lies in the training process, which generally consists of two stages:

One example is a project undertaken with a large nonprofit organization in the public sector in the United States. One component of the project is a tool (Agent LLM) that lets anyone ask questions (prompts) about the state of the area under the institution's responsibility, for example: "What were the regulations in sector X over the past two years and what was the average value of parameter Y?"

In this project, to produce truth-aligned natural language responses that simulate a conversation with an expert, a model tailored to the task was proposed, and 170,000 reports in PDF format (each around 100 pages) were indexed. This enabled highly precise responses to prompts. Full deployment is planned in multiple phases, the first being tests in 10 selected areas; gradual rollout of such tools is key to success in organizations of this size.
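The article does not describe how the indexed reports are used when answering a prompt. A common pattern is retrieval-augmented generation: relevant passages are retrieved from the index and placed in the prompt, together with an instruction to answer only from them. The sketch below illustrates that pattern under stated assumptions. It is not the project's implementation, all function names are hypothetical, and simple keyword overlap stands in for the embedding or BM25 search that a production index would typically use.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (a production system would use embeddings or BM25)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(doc_id: str, text: str, size: int = 50) -> list[tuple[str, str]]:
    """Split a document into fixed-size word windows tagged with a source ID."""
    words = text.split()
    return [(f"{doc_id}#{i // size}", " ".join(words[i:i + size]))
            for i in range(0, len(words), size)]


def retrieve(question: str, chunks: list[tuple[str, str]], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k chunks ranked by keyword overlap, skipping chunks with no overlap."""
    q_words = set(tokenize(question))

    def score(item: tuple[str, str]) -> int:
        counts = Counter(tokenize(item[1]))
        return sum(counts[w] for w in q_words)

    ranked = sorted(chunks, key=score, reverse=True)
    return [c for c in ranked[:k] if score(c) > 0]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Ground the model: answer only from the passages, cite them, or admit ignorance."""
    context = "\n".join(f"[{src}] {text}" for src, text in passages)
    return ("Answer using only the sources below and cite their IDs. "
            "If the sources do not contain the answer, say you don't know.\n\n"
            f"Sources:\n{context}\n\nQuestion: {question}")


if __name__ == "__main__":
    # Hypothetical toy "reports"; the real project indexed PDF reports.
    reports = {
        "report-A": "In 2022 new regulations for sector X limited emissions. "
                    "The average value of parameter Y was 4.2.",
        "report-B": "Sector Z saw no regulatory changes. "
                    "Budget figures are listed in the annex.",
    }
    chunks = [c for doc_id, text in reports.items() for c in chunk(doc_id, text)]
    question = "What were the regulations in sector X and the average value of parameter Y?"
    print(build_prompt(question, retrieve(question, chunks, k=1)))
```

Tagging each chunk with a source ID lets the model cite its sources, and it lets users verify an answer against the original report. This matters most in the public sector setting described here.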

Practice shows that LLMs can be deployed in many areas of the public sector, provided that the solutions and procedures applied drastically reduce unreliability. Unreliability could otherwise lead to a loss of trust and other consequences, particularly given the social responsibility of such institutions.
