
LlamaIndex (also known as GPT Index) is a user-friendly interface that connects your external data with LLMs (Large Language Models). It comes with a variety of tools designed to simplify the process, including data connectors that integrate with existing data sources and formats such as APIs, PDFs, docs, and SQL. Additionally, LlamaIndex provides indices for your structured and unstructured data, which can be used seamlessly with LLMs.

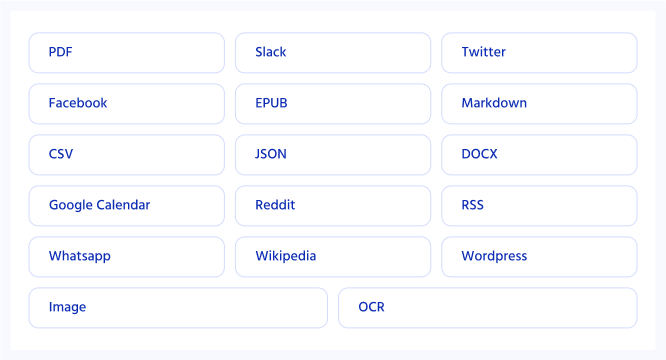

The most popular formats and applications that LlamaIndex can index. You can find the full list here.

The name "LlamaIndex" comes from the fact that the index is based on the idea of a "llama curve." This curve represents the relationship between the complexity of a language task and the size of the language model required to perform that task with a certain level of accuracy. The idea is that as the complexity of the task increases, so does the size of the model required to perform it well.

LlamaIndex has several potential applications. For example, it could be used to guide the development of new language models by identifying the tasks that are most challenging for current models and targeting improvements in those areas. It could also be used to compare the performance of different models in a specific domain or language, helping to guide the selection of the most appropriate model for a particular task. However, the most important thing (at least from the point of view of this article) is that GPT Index works well when we want to use the power of LLMs for organizational purposes.

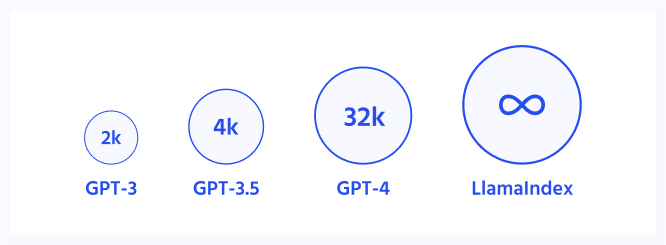

Why do we need more than "pure" ChatGPT for commercial use? The main reason is that ChatGPT itself accepts too few tokens*:

*1,000 tokens is about 750 words
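The rule of thumb above can be turned into a quick back-of-the-envelope check. This is only a minimal sketch: the function names are our own, the context limit is whatever value the caller passes in, and a real tokenizer will give somewhat different counts than this word-based estimate.

```python
WORDS_PER_1000_TOKENS = 750  # rule of thumb quoted above, not an exact figure


def estimate_tokens(word_count: int) -> int:
    """Rough token estimate for English text based on its word count."""
    if word_count < 0:
        raise ValueError("word_count must be non-negative")
    return round(word_count * 1000 / WORDS_PER_1000_TOKENS)


def fits_in_context(word_count: int, context_limit_tokens: int) -> bool:
    """True if the estimated token count fits within the given limit."""
    return estimate_tokens(word_count) <= context_limit_tokens
```

For example, `fits_in_context(30_000, 4_000)` is false: a document of 30,000 words is estimated at about 40,000 tokens, far above an illustrative limit of 4,000 tokens.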

Number of tokens accepted by GPT-3, GPT-3.5, GPT-4 and LlamaIndex

With that number of tokens, it’s not possible to put larger datasets into the prompt, and because of these limits many operations cannot be performed. It’s true that the model can be trained on your data instead, but that has some downsides (and upsides; we will write another blog post soon to compare the two approaches). This is where LlamaIndex comes to the rescue. It allows you to index any dataset (documents, PDFs, databases and more; you can check supported formats here) and query it according to your needs. So:

And imagine getting answers backed by the reasoning power of GPT, all within seconds, without even having to paste anything into prompts.

Thanks to LlamaIndex, you get not only answers from the dataset, but also information about which documents they come from. This turns out to be very helpful for finding information, working further with the data and drawing conclusions.

All this can be achieved with the proper implementation of GPT Index! How? In the next part of this article we will present our thoughts on implementing such a solution, based on real case studies from our clients.

Before we can ask questions in natural language and get satisfactory answers, we need to index our datasets. As mentioned earlier, LlamaIndex is able to index almost anything (and with GPT-4, multimodal indexing is coming soon). While implementing LLMs for our clients, we noted several important aspects that we want to share with you, namely: indexing cost, indexing time (speed) and types of indexers.

Indexing cost

What is the cost of indexing? As we pointed out at the beginning of the article, apart from the number of tokens, it is the issue of costs that makes us turn to LlamaIndex. This is important because we assume indexing of huge datasets. We have been able to index tens of thousands of pages for our clients.

Currently, the cost of indexing is 1 cent per page.

You can find prices for individual OpenAI models.
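The per-page figure makes it easy to budget a project up front. Below is a minimal sketch of that arithmetic; the function name is our own, and the constant simply restates the 1 cent per page quoted above, which will change as model prices change.

```python
from decimal import Decimal

# Figure quoted in this article; treat it as a snapshot, not a fixed price.
COST_PER_PAGE_USD = Decimal("0.01")


def indexing_cost_usd(pages: int) -> Decimal:
    """Estimated one-off indexing cost in USD for a given number of pages."""
    if pages < 0:
        raise ValueError("pages must be non-negative")
    return COST_PER_PAGE_USD * pages
```

At this rate, `indexing_cost_usd(50_000)` comes to 500 USD, so indexing tens of thousands of pages stays in the range of hundreds of dollars.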

Indexing speed

The second important issue is document indexing time, i.e. the time needed to prepare the entire solution for operation. In our experience, indexing is not fast, but it is a one-off step. For example:

On the other hand, huge datasets, such as 100,000 pages, will need more time, on the order of a few days. The exact time is hard to estimate due to the server issues mentioned earlier.

Types of indexers

When creating a solution based on LlamaIndex, we considered two different indexers. For some tasks, precision will be the most important issue, while for others it will be time. Below is a comparison of the two indexers:

GPTTreeIndex/GPTListIndex: when you need precision; ~163 seconds to index a 40-page document.

GPTSimpleVectorIndex: when speed matters most; ~13 seconds to index a 40-page document.
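To get a feel for what these measurements mean for larger datasets, they can be extrapolated. The sketch below assumes that indexing time grows linearly with the number of pages, which is our simplifying assumption; in practice API rate limits and server load will change the result. The function name is hypothetical.

```python
MEASURED_PAGES = 40

# Seconds needed to index a 40-page document, as measured above.
MEASURED_SECONDS = {
    "GPTTreeIndex/GPTListIndex": 163,
    "GPTSimpleVectorIndex": 13,
}


def estimate_indexing_hours(index_type: str, pages: int) -> float:
    """Linear extrapolation of indexing time in hours (an assumption)."""
    seconds_per_page = MEASURED_SECONDS[index_type] / MEASURED_PAGES
    return seconds_per_page * pages / 3600


for name in MEASURED_SECONDS:
    hours = estimate_indexing_hours(name, 100_000)
    print(f"{name}: ~{hours:.0f} h for 100,000 pages")
```

Under this assumption, 100,000 pages take roughly 113 hours (about 4.7 days) with GPTTreeIndex/GPTListIndex, which is in line with the "few days" mentioned earlier, and roughly 9 hours with GPTSimpleVectorIndex.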

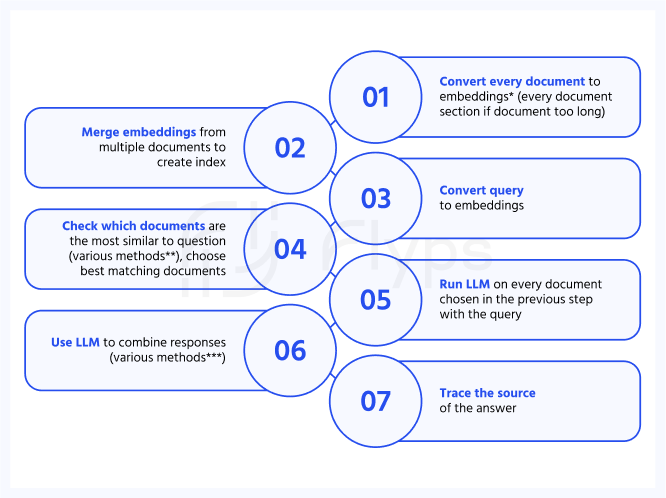

How does the LlamaIndex algorithm work? What do the different stages look like? Below is an infographic explaining the whole process. It is based on our experience from the LLM projects that we build for our clients.

The stages of LlamaIndex (GPT Index) algorithm

*An embedding is a vector of numbers that represents a word or phrase. The vectors are created so that words with similar meanings have similar embeddings. The conversion of words to embeddings is done by a neural network and is the first processing stage in LLMs.
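"Similar embeddings" is usually measured with cosine similarity. Here is a minimal sketch of that idea; the three-dimensional vectors are made up for illustration, while real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Toy vectors, invented for this example only.
toy_embeddings = {
    "invoice": [0.9, 0.1, 0.0],
    "bill": [0.8, 0.2, 0.1],
    "llama": [0.0, 0.2, 0.9],
}

print(cosine_similarity(toy_embeddings["invoice"], toy_embeddings["bill"]))
print(cosine_similarity(toy_embeddings["invoice"], toy_embeddings["llama"]))
```

With these toy values, "invoice" and "bill" score much closer to 1 than "invoice" and "llama", which is the behavior we expect from words with related meanings.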

**Methods of matching documents with a query:

by embedding similarity (does not require an LLM for matching)

by keywords (does not require an LLM for matching)

by document summaries (requires an LLM for matching; summaries can be added manually or generated by an LLM during document indexing)
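The first two matching methods can be sketched without any LLM. The code below is a simplified illustration and not the LlamaIndex implementation: the `Doc` class, the function names, the file names and the toy embedding vectors are all hypothetical. Summary-based matching is left out because it needs a model call.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Doc:
    name: str
    text: str
    embedding: List[float]  # toy vector; normally produced by an embedding model


def _cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def rank_by_embedding(query_embedding: List[float], docs: List[Doc], top_k: int = 1) -> List[Doc]:
    """Return the top_k documents whose embeddings are closest to the query."""
    ranked = sorted(docs, key=lambda d: _cosine(query_embedding, d.embedding), reverse=True)
    return ranked[:top_k]


def rank_by_keywords(query: str, docs: List[Doc], top_k: int = 1) -> List[Doc]:
    """Return the top_k documents sharing the most words with the query."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(d.text.lower().split())), d) for d in docs]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [d for score, d in ranked if score > 0][:top_k]


docs = [
    Doc("contract.pdf", "payment terms and invoice deadlines", [0.9, 0.1, 0.0]),
    Doc("handbook.pdf", "vacation policy and remote work rules", [0.1, 0.9, 0.1]),
]

print(rank_by_keywords("invoice payment deadline", docs)[0].name)
print(rank_by_embedding([0.8, 0.2, 0.0], docs)[0].name)
```

Both calls select `contract.pdf` here. Keyword matching is cheap but only finds exact word overlap, while embedding matching can also find documents that use different wording for the same topic.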

***Methods of combining responses:

List: creating the response from the first document, then refining it with each subsequent document

Vector: creating a response for every document, then using the LLM to create one response based on the responses from all the documents

Tree: creating responses for two documents at a time, using the LLM to join the two responses into one, and repeating this for multiple document pairs in a tree structure.
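The three combining strategies differ mainly in how many LLM calls they make and in what order. The sketch below is our interpretation of the descriptions above, not LlamaIndex code: `CountingLLM` is a hypothetical stand-in for a real model, the prompts are placeholders, and in the tree variant we assume each document is answered first and the answers are then joined in pairs.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical stand-in for a real model call


def combine_list(llm: LLM, query: str, docs: List[str]) -> str:
    """Answer from the first document, then refine with each next one."""
    if not docs:
        raise ValueError("at least one document is required")
    response = llm(f"Answer '{query}' using: {docs[0]}")
    for doc in docs[1:]:
        response = llm(f"Refine '{response}' for '{query}' using: {doc}")
    return response


def combine_vector(llm: LLM, query: str, docs: List[str]) -> str:
    """Answer per document, then merge all answers in one final call."""
    if not docs:
        raise ValueError("at least one document is required")
    partial = [llm(f"Answer '{query}' using: {doc}") for doc in docs]
    return llm(f"Merge into one answer for '{query}': {partial}")


def combine_tree(llm: LLM, query: str, docs: List[str]) -> str:
    """Answer per document, then join answers pairwise until one remains."""
    if not docs:
        raise ValueError("at least one document is required")
    level = [llm(f"Answer '{query}' using: {doc}") for doc in docs]
    while len(level) > 1:
        next_level = []
        for i in range(0, len(level), 2):
            pair = level[i:i + 2]
            if len(pair) == 2:
                next_level.append(llm(f"Join for '{query}': {pair[0]} | {pair[1]}"))
            else:
                next_level.append(pair[0])  # odd answer is carried up unchanged
        level = next_level
    return level[0]


class CountingLLM:
    """Fake model that only counts how often it is called."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, prompt: str) -> str:
        self.calls += 1
        return f"response-{self.calls}"
```

With four documents, this sketch makes 4 calls for the list strategy, 5 for the vector strategy and 7 for the tree strategy. The list strategy must run sequentially, whereas the per-document calls in the other two could be issued in parallel.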

Available models

The default model is text-davinci-003. An interface can be built for practically any LLM, and many models already have interfaces implemented. The full list is available here.

Our experience in building solutions based on LLMs comes from customer needs and the problems customers bring to us. The main problem is the huge volume of data. The amount of information that organizations have or use is increasing day by day. Companies often lose control over the information they hold, so it cannot be put to good use. Applications based on LlamaIndex help here: they allow organizations to use all this information to conduct analyses and draw conclusions and insights.

Check out the applications built on LlamaIndex.

Still not sure how your organization can use applications running on LLMs? What benefits can be drawn from them? Here’s a list of use cases from various organizations that may inspire you:

With the spread of AI technology, the race to use LLMs has begun. The powerful possibilities of such solutions (if correctly implemented) give a significant advantage on the market. Soon, they will simply become a matter of “to be or not to be” for many organizations.

Want to try LLMs with Flyps?

You can send us a set of documents and the questions you want to ask, and we will send back the answers that LlamaIndex generates using different LLMs and different types of indexes. We can also prepare a cost analysis of the individual sets of solutions.
