High-Tech Software House

(5.0)

If you follow our blog, you might remember that we began our SLAM article with a small pun. We wanted to think about something connected to the YOLO we know and love from pop culture and Drake songs, but we decided not to. There’s just so much to talk about the YOLO we have in mind today! You Only Look Once is a state-of-the-art algorithm for Real-Time Object Detection systems, that’s redefining computer vision and its possible uses. Unfortunately, we can’t cover all of it in just one article, that’s why we’re dividing it into parts! Today, we’ll take a look at what exactly is object detection, how object detection was handled before the invention of YOLO, and what were obsolete methods of handling object detection such as CNN, R-CNN and sliding window object detection. Object detection algorithms, including YOLO naturally, are an incredibly complex topic, that’s why we’re dividing this article into parts.

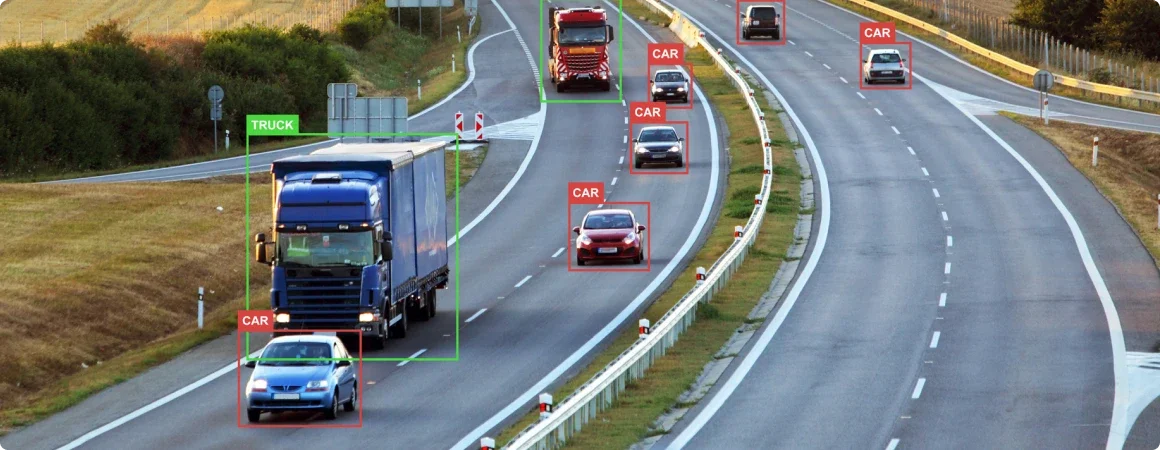

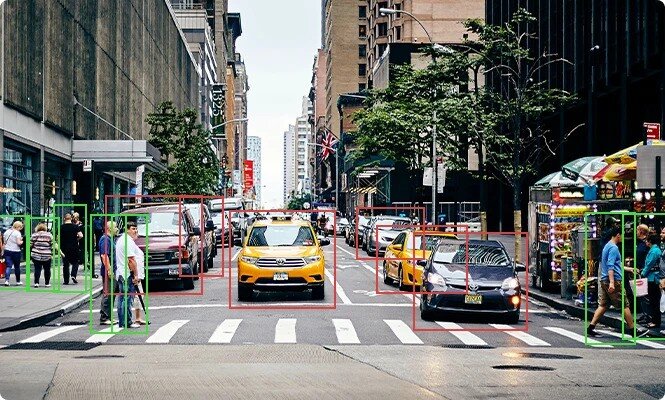

We, as humans, utilize an array of highly specialized senses. The sense of smell and taste, subtle senses of touch and arguably the two we most depend on, hearing and seeing. With the development of artificial intelligence and intricate robotics, we started to think, what will happen if we give vision to robots? Think of all the incredible things that could be achieved with that! Vehicle and pedestrian detection on busy streets can help immensely with road safety and lower the number of vehicle accident casualties. With the use of object detection in agriculture, farmers can deploy computers to count and analyze the number of animals in an area, and quickly assess the quality of the product, allowing for more efficient and convenient farming. Naturally, object detection can also be used for security. Imagine a camera equipped with a complex computer vision algorithm, placed somewhere in a train station. With that, it could give an immediate signal to the security guard, or voice the alarm, as soon as it sees someone trespassing on the train tracks. Object detection also facilitates great growth in the medical field. It can make analyzing samples and diagnosing illnesses much quicker and optimized. And as we all know, very often when it comes to our health, time is of utmost importance. That's how the idea of object detection came to be in the field of computer vision. Its essence is already in its name: object detection is a highly specialized computer vision task used to detect and identify objects seen through a camera. Simply put, we ask the computer “what objects are where?” and “what do you see?”.

What are the old methods of object detection?

YOLO is one of the newer and most complex methods of object detection, but we wouldn’t be able to get to it, if not for the methods used previously. The three most commonly used methods of object detection were:

- CNN

- R-CNN

-Sliding window object detection

Let’s take a closer look at them, shall we?

MIT Press published a journal named “Neural Computation”. In there, we can find a riveting article “Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review” that provides us with a stellar definition of CNN. “CNN's are feedforward networks in that information flow takes place in one direction only, from their inputs to their outputs. Just as artificial neural networks (ANN) are biologically inspired, so are CNNs. The visual cortex in the brain, which consists of alternating layers of simple and complex cells, motivates their architecture. CNN architectures come in several variations; however, in general, they consist of convolutional and pooling (or subsampling) layers, which are grouped into modules. Either one or more fully connected layers, as in a standard feedforward neural network, follow these modules.” It’s a truly fascinating paper, written by two South African researchers, Waseem Rawat and Zenghui Wang.

R-CNN is a complex algorithm that uses CNN through one of the stages, with a focus on object detection. They stem from the same source, with R-CNN working strictly on detecting objects, using the convolutional neural network structure.

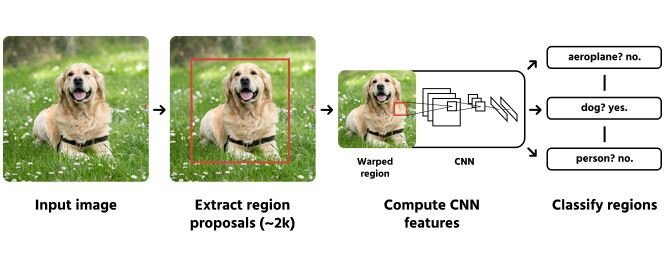

R-CNN stands for Region-based Convolutional Neural Network. It’s a group of machine learning algorithms used in image processing and computer vision. They were designed specifically to detect objects in any input image defining boundaries around them. How does R-CNN work? We take an image we want to analyze and put it on a selective search algorithm to extract region proposals. Thanks to that mechanism, we can extract information about the region of interest, represented by rectangle boundaries called bounding boxes. Depending on the source image, there can be over 2000 regions of interest. Each region of interest is then put through CNN to produce output features. These features go through the Support Vector Machine (SVM) classifier. That allows us to classify the objects presented under the regions of interest.

Here you can see how the procedure of an R-CNN looks while detecting an object. By using the R-CNN ew extract regions of interest using the region extraction algorithm. Authors initially were able to gather up to 2000 proposals. For each one of them, the model manages the size to be fitted for the CNN. CNN then computes the features of the region and SVM classifiers classify what objects are presented in the region.

What’s faster than R-CNN? Fast R-CNN of course! The major problem with the computing speed of R-CNN is the need to run the algorithm up to 2000 times per image. If we could only run the CNN once per image to get all the regions of interest at once, we could save up an incredible amount of time.

That’s when the author of R-CNN, Ross Girshick invented the Fast R-CNN, which accomplishes exactly that. We feed the input image to the CNN, which then generates the convolutional feature map. The region proposals are extracted by using this map. Finally, we use a RoI pooling layer to fix all the proposed regions into one size, so that we can input it into a fully connected network.

Sliding window object detection

The sliding window is one of the approaches for object detection. The task is based on a binary classifier, that takes the part of the image which overlaps with the window and determines whether the object fits the classifier or not. After that, you repeat the process by sliding the window, until it goes through the entire image. It’s a common brute-force approach with many local decisions.

What’s important to keep in mind, is that you cannot feed the raw image window into the classifier. The classifier makes a decision based on features extracted from the image window. The most important component of this approach is the way we extract the features. There are many feature extraction methods and feature representations, some of the most common ones being:

- Pixel-based representations

- Color-based representations

- Gradient-based representations

One of the representative feature descriptors with gradient-based representations is commonly referred to as HoG. Histograms of Oriented Gradients are often used to extract strong features for object detection.

As we mentioned in the opening of this article, the history and specifics of object detection is an incredibly complex topic, requiring a lot of in-depth research and understanding. Not to overload your senses, we’ll come back to the topic in the next part of this article. Stay tuned to learn exactly why YOLO is better than the methods we mentioned above, and how it revolutionizes the world of object detection. We’ll talk more in-depth about how YOLO works and how it was created. Is there anything else you’d like to learn about object detection? Maybe you’ve been working on a project focusing on it? We would absolutely love to exchange our experience with you, and hopefully learn something new, as well as have an opportunity to teach and offer support! Please, feel free to write to us using our contact form or through the [email protected] e-mail.

See our tech insights

Like our satisfied customers from many industries