High-Tech Software House

(5.0)

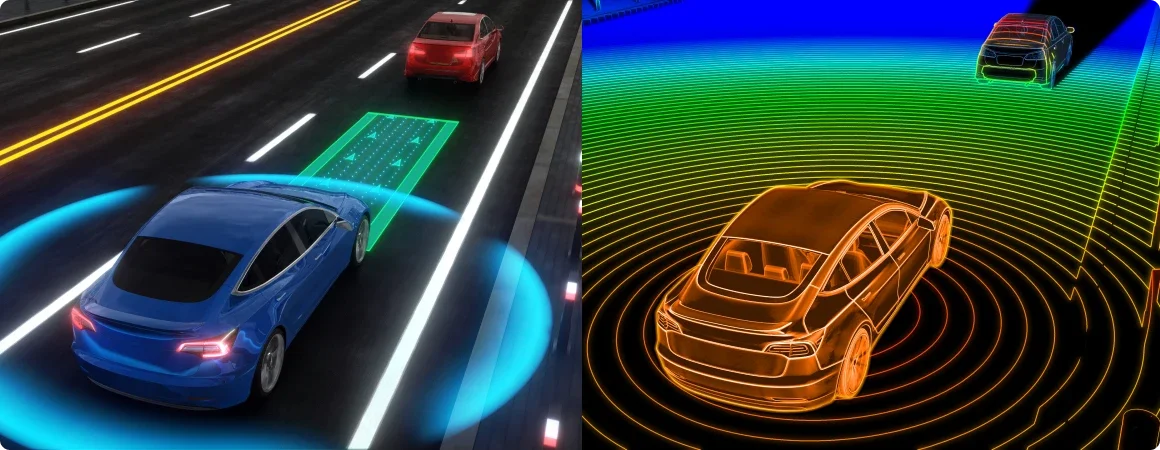

Nowadays, autonomous robots are becoming more and more present in our daily lives. They are used in various economic sectors and are undoubtedly the future not only of robotics but also of industry. But sometimes, confusion arises from the definition of the robot itself - what actually is a robot? Simply put, a robot is a machine that performs various tasks for us. Note that the definition applies not only to complex, high-tech human-like robots that do difficult jobs (like the famous Atlas | Partners in Parkour) or autonomous cars but also to a whole range of much simpler autonomous vehicles like vacuum cleaners or drones. If you want to know a bit more about this, read our previous post Challenges in the real-world deployment of an autonomous robot.

And how do automated robots work? Their phenomenon is that they do not need constant control, so they can perform the tasks entrusted to them without constant supervision autonomously. However, for this to succeed, they must be able to navigate around the area in which they operate. For the purposes of the following post, let's focus on two main questions that arise when the robot navigates autonomously:

1. Where am I (state estimation)?

2. What is the environment (mapping assignment)?

An important thing to understand is that these two questions are tightly coupled because they depend on each other. To answer the localisation (state) question, we need to know the map, but to build that map, we need to know the location. Researchers to solve that chicken-and-egg problem refer to Simultaneous Localisation and Mapping (SLAM), which consists of a large collection of methods creating a whole robotics framework.

As mentioned earlier, this blog post will focus on mapping and localisation. Mapping is a process of collecting sensor data and modelling the environment. Two types of maps are distinguished: volumetric maps, which contain geometric information, and feature-based maps, which contain a description of detected key points. Localisation, on the other hand, involves estimating the current state of a robot based on noisy sensor measurements and control commands. To compute these quantities, we use observational and motion models that allow us to apply Bayes' famous rule to determine the most likely state of a robot agent. We also distinguish between the full SLAM, which aims to estimate an entire path, and the online SLAM, which focuses on the last pose. In the following paper, we focus on explaining to the readers the core concepts of the SLAM framework - localisation and mapping, which we have already mentioned.

In real-world scenarios, sensor measurements are noisy, so when the robot moves, the uncertainty of its pose grows even more. An incorrect map leads to misleading localisation, and the loop closes. That is why SLAM is both problematic and necessary.

When reading the following article, some complex, domain-specific terms might be unclear for readers less familiar with the subject. To be on the same page, let's start with a gentle introduction to the problem.

A robot is an agent that goes through the environment. It performs a state estimation, which is a process of estimating its position, orientation, landmarks' position, etc., based on noisy sensor data and control commands. We assume the localisation assignment is closely related to state estimation being a part of it, in which we estimate a robot's pose (position + orientation) on a map. Mapping in robotics is registering the sensor data and creating the environment's representation. Finally, SLAM is a joint process of localisation and mapping at once. Imagine we have a sensor that gives us the distance to the nearest obstacle. Having the localisation set up correctly, we can place the sensor readings on the map; however, when the localisation is uncertain (what is always true in the real world), it affects the mapping process. On the other hand, lousy mapping leads to uncertainty in the localisation procedure.

Typically, a localisation assignment assumes that the agent has a prior map of the environment in which it will operate because, in the end, it needs to find its pose within some neighbourhood. As we mentioned earlier, the sensor data is noisy, so as the robot moves, the uncertainty of its localisation grows over time. To model the uncertainty in robotics, we do not assume we know exactly where a robot is. We use probabilities that say a robot is at some point with some uncertainty. These uncertainties are in the robot's motions and sensor measurements. Summing up, probability theory enables us to model uncertainty directly.

A family of so-called Bayesian filters is probably the most popular and widespread method for online state estimation. The word "online" means that the algorithm updates the current state using the most recent observations and control commands. It includes algorithms like the Kalman Filter, Particle Filter, discrete filters (e.g., histogram filter), or information filtering (which is basically a Kalman Filter in the information space). They all follow the same structure and equations to estimate the state. However, they hold different assumptions.

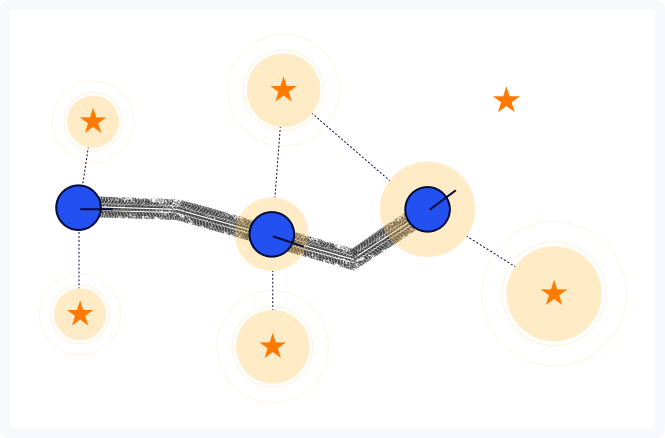

The example of the Bayesian localisation framework working on Lidar scans with known maps. As the robot moves, the algorithm estimates the most likely current state. Green dots represent Lidar scans aligned with a known map (white dots). Based on the work with online code available at GitHub

Generally speaking, given measurements and control commands, such a filter estimates a current state of a dynamic system using a conditional probability. The filter recursively updates a robot's belief about its state (xt), given noisy sensor measurements (zt). Typically, Bayesian Filter uses two types of models:

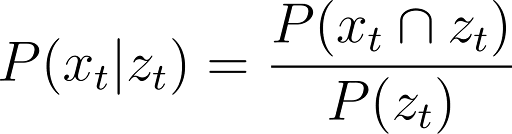

Using these models, we can estimate how the agent moves and what uncertainties are present in the measurement step. In such a setup, the following formula gives the conditional probability for the current state given the measurement.

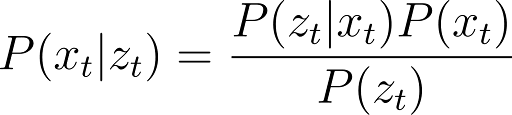

It is the probability of an event happening given prior knowledge we already have, which means scaled by the knowledge. However, the careful reader might say that we cannot easily calculate the numerator P(xt∩zt) because that event means that both state and measurements are true. We just don't know the robot's path beforehand. And that is a good observation. More often in robotics, one can find another formulation for the conditional probability that is one of the most famous mathematical theorems - Bayes's Theorem. It tells us that some probabilities might be derived from other conditional probabilities of independent events. Using such a thought process, we end up with the following equation.

Bayes's Theorem in robotics lets us estimate the current state of the robot given uncertain measurements. It is an online process that iteratively works while the robot operates.

For the localisation assignment, we can also use other techniques. For example, when the system has a camera on board, we can utilise another popular state estimation technique, which is bundle adjustment. However, localisation is a very broad topic and let's not cloud the picture but move to the mapping process.

A mapping process starts with a question about the map itself - what is a map? Or better - what is a good map? What map do we need to operate autonomously? These application-specific questions must be answered before we proceed to implement SLAM. Maps represent the environment's topology, capturing the most important information from the robot's perspective. However, the maps' taxonomy in SLAM is another broad subfield. We can define static environments or dynamic environments. We can have only one robot or an entire fleet that simultaneously gathers data. Still, the following article tries to distil the most relevant information about SLAM, so let’s split maps into two intuitive categories: volumetric and feature-based.

A point cloud is a common sensor data type used in SLAM. Maps created using point clouds represent a 3D volume of the environment and primarily contain its geometry. An agent navigating through such a map would localise itself using geometrical cues, iteratively comparing measurements from its sensors (e.g., LiDAR’s scans) with the map. The other approach is represented by feature-based maps. These, in turn, contain some landmarks that are easily recognisable by the robotic agent. They can be any distinct place in the environment, e.g., visual descriptors at key points found in the camera image. However, feature-based maps always need an extra step to extract these landmarks.

The Iterative Closest Point algorithm used in the online map-building pipeline. Based on the work and their GitHub.

Mapping is a process of fusing current knowledge with new measurements. It can be achieved in multiple ways, but let's focus on geometrical maps. Assume we have a robot equipped with LiDAR, and we want to map the unknown environment. As the robot moves, new scans shift towards the robot's motion, but at the same time, we have to integrate them into one common map. Remember that our measurements are noisy? That is why a simple concatenation of scans will give us a very poor result. A common technique in SLAM is the Iterative Closest Point (ICP) algorithm to align consecutive point clouds. Broadly speaking, it consists of an iterative process of associating measurement with the existing map and transforming it to align it by minimising distances between associated points in both point clouds. It has many variations, but all of them follow the same structure.

After a brief introduction to localisation and mapping, we are finally ready to define the SLAM problem. Mathematically let's consider it as a probability of a series of robot states (x0:t) and a map (m) given observations (z1:t) and control commands (u1:t). In other words, we want to obtain robot states and an environment representation based on how it was moving and what it saw with its sensors. Such a problem is known as a Full SLAM, i.e., we estimate the most probable path from the start of a robot given all measurements and controls to the current moment. We can define it by the following formula for the probability distribution.

On the other hand, when we are interested only in the current pose of a robotic agent, not the whole path, then the problem is called an Online SLAM. It is solved iteratively, one at a time and based on marginalising previous poses. In such a scenario, the Full SLAM probability distribution formula reduces to the following one.

Generally speaking, the SLAM problem is hard to crack. As the robot moves, its localisation uncertainty increases in both cases, online and offline, which is the root cause of many difficulties. As there is a correlation between observing landmarks and motion, those observations integrate more and more errors of localisation and sensor noise in the map. Additionally, picking the wrong data associations might completely destroy the mapping process because the robot would end up in the wrong place, causing some dangerous situations. The smaller the robot poses uncertainty, the better the data association. That is why it is so important to thoroughly prepare both software and hardware.

Simultaneous Localisation and Mapping is one of the most important issues regarding automated robots, a solution without which they could not fulfil their tasks. Undoubtedly, this is a highly technological challenge, so it is worth making the greatest effort to approach it with expert knowledge. Many organisations, even with an in-house team of programmers, prefer to entrust software development to experienced specialists. Although it's difficult, the possibilities offered by autonomous robots cannot be overestimated, and a well-prepared and implemented solution can be a real revolution for an organisation. If you would like to consult on autonomous robots, SLAM or other high-tech solutions, please contact us. Our team of experts will certainly be able to help you.

And don’t be a stranger at Flyps blog! In the next post from the SLAM series, we'll review some of the SLAM algorithms that use deep learning and more classical ones. Stay tuned!

See our tech insights

Like our satisfied customers from many industries