High-Tech Software House

(5.0)

The paper received the best paper award at the International Conference on Robotics and Automation (ICRA) 2022, held in Philadelphia, USA.

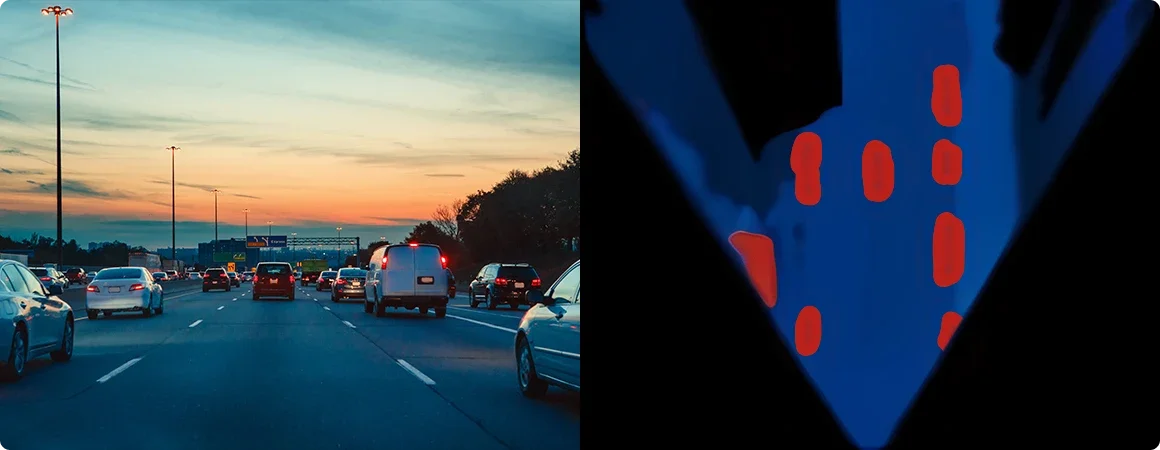

The following paper tackles the problem of an online generation of bird's-eye-view (BEV) semantically-labelled maps from monocular camera images. The concern is paramount for autonomous driving because BEV maps are commonly used for safe navigation. The novelty comes from formulating the mapping task as finding correspondences between columns of pixels and rays on BEV maps using deep learning models.

The authors noticed that such a task has the same structure as well-known sequence-to-sequence problems in Natural Language Processing (NLP). Such a model translates one sequence of words in some language to another sequence in another language. This formulation allowed for using Transformer-based models with an attention mechanism that appeared to be highly useful.

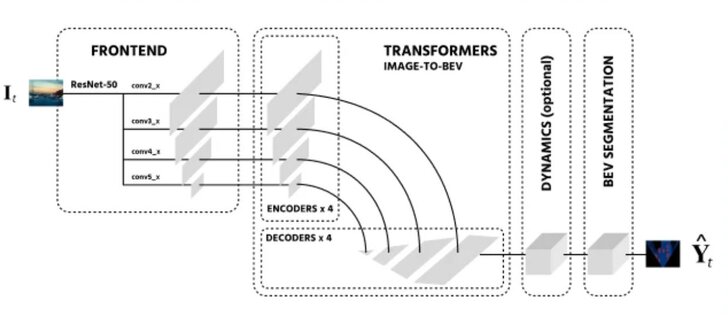

The proposed pipeline consists of three main parts.

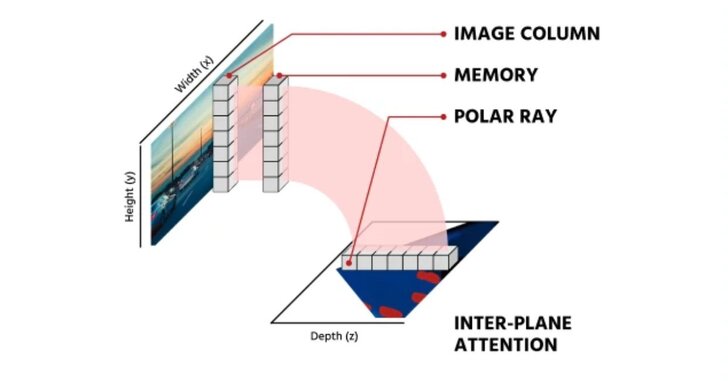

The number of pixels in the image is significantly larger than the number of words in typical sentences. Thus the number of candidates for attention on the 2D image can be enormous. Hence, to avoid computational exhaust, the authors proposed constraining the attention mechanism only to columns of pixels translated into rays on the BEV map.

On the other hand, using only one column as input to the Transformer model could be troublesome because such a block contains only little spatial information along one axis. To avoid that issue and increase the context of these pixel columns, convolutional ResNet-50 blocks called the Frontend preprocess raw images into pixel features, and columns from these features are passed to the Transformer model.

Moreover, constructed embeddings also encode information about a scene's semantics and depth apart from the positions of pixels. The persistence of that knowledge through the whole pipeline remains crucial, as semantic segmentation is at the top of it.

In general, transformers gained significant attention in Natural Language Processing, widely used for sequence-to-sequence tasks, such as sentence translation. A typical mechanism in transformers is attention. During training, it learns to which parts of the input it should attend more., i.e., which of them are more relevant from a task's perspective? The Transformer model transforms input sequences (representing a flat image) into another line of features representing BEV maps. All in all, the result is just a vector of features that still need to be translated into an actual BEV map.

The authors placed a convolutional encoder at the top of the proposed pipeline, which produces semantic occupancy maps. Unlike the 1D contextual reasoning of attention modules, that block involves 2D convolutional kernels. They have a broader backdrop that improves finding correspondences along the x-axis of a feature map and decreases discontinuities between polar rays of a resulting BEV map.

The authors tested their method on three large-scale public datasets for autonomous driving - nuScenes, Argoverse, and Lyft. The proposed architecture produces BEV feature maps from the transformer decoder that have a resolution of 100x100 pixels, and each pixel represents 0.5m2. Moreover, the architecture includes a specialized component based on axial attention that merges the spatial and temporal information from previous frames to build the current BEV feature map. While training, the model takes a series of 4 images at a frequency 6Hz and predicts the last of them.

Typically ablation studies in the machine learning field elaborate on how individual learnable components influence the predictions of a system. The authors proposed to verify that through three separate experiments, and there are three main conclusions::

The spatiotemporal attention-based model outperformed current state-of-the-art approaches in the segmentation of objects in the BEV maps - especially in comparison with the best performing (and also made by the same group) the previous method called STA-S. The improvement is especially visible for dynamic classes, such as buses, trucks, trailers, and barriers. The mean Intersection over Union (IoU) improved relatively by 38% for these classes on the nuScenes dataset. For the Agroverse and Lyft datasets, the experiments yielded similar numbers. However, the comparison using the Lyft dataset was not directly possible due to the lack of a train/test split.

The paper "Bird's-eye-view Semantic Mapping with Spatiotemporal Attention" tackles the problem of creating bird's-eye-view (BEV) maps from monocular camera images. The paper's novelty comes from formulating the mapping task as finding correspondences between columns of pixels and rays on BEV maps using deep learning models. The proposed pipeline consists of three main parts: image representation, plane transformation, and semantic segmentation. The authors tested their method on three large-scale public datasets for autonomous driving - nuScenes, Argoverse, and Lyft. The ablation studies showed that the direction of attention in the image matters and that incorporating information about polar coordinates in the transformer encoder or decoder improves the model's performance.

The group won the best paper award at the International Conference on Robotics and Automation (ICRA) 2022, which is thoroughly deserved. The novelty of a simple yet effective technique and a thorough comparison with state-of-the-art approaches is admirable.

We are curious to hear your opinion. Have you come into contact with this topic in your current project? Please share your feedback with us. If you have any questions, please feel free to contact us.

The graphics in this article were created with images from www and doc.

See our tech insights

Like our satisfied customers from many industries